How to Avoid 5 Types of Cognitive Bias in User Research

Mitigating Cognitive Biases in User Research for Superior Product Design

Unveiling and addressing cognitive biases in user research is paramount to crafting digital products that genuinely resonate with users. Counteract these prevalent biases to ensure that design alterations align with the authentic needs of your customers, facilitating the creation of products that garner genuine user satisfaction.

By CLARA

Navigating Cognitive Biases in User Research for Optimal Design Outcomes

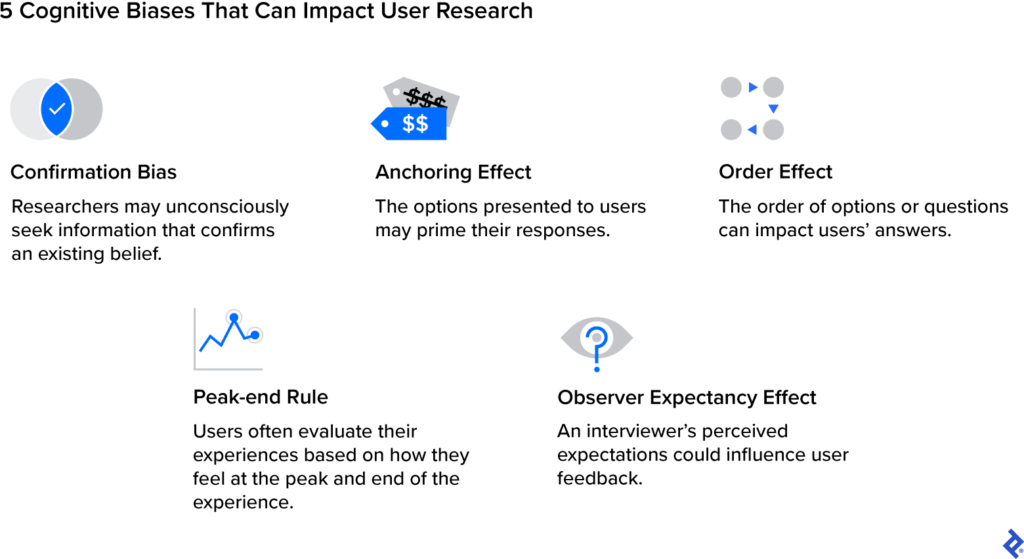

While designers acknowledge the pivotal role of user research in crafting an exceptional user experience, the impact of cognitive biases on research outcomes remains a critical concern. Cognitive biases, inherent mental shortcuts affecting information interpretation and decision-making, can subtly undermine the effectiveness of digital products. Many individuals, despite their susceptibility to biases, may not be fully conscious of their influence, leading to a bias blind spot where people believe they are less biased than their peers.

Leveraging seven years of experience in surveying and collecting user feedback, I’ve encountered various ways cognitive biases can distort results and sway design decisions. By cultivating awareness of these biases and implementing strategies to mitigate them, designers can conduct research that authentically reflects user needs. This, in turn, informs design solutions that genuinely enhance a product’s design and serve the customer more effectively. In this article, I delve into five types of cognitive biases in user research and present actionable steps for designers to mitigate these biases, fostering the creation of more successful products.

Confirmation Bias: Curating Evidence to Align with Preexisting Beliefs

Confirmation bias is the tendency to seek information that validates preexisting beliefs while disregarding facts that contradict them. In user research, this bias can distort the interpretation of data and compromise the objectivity of the findings. It may show up as designers prioritizing feedback that affirms their own opinions about a design and dismissing constructive feedback they disagree with, which naturally leads to design solutions that don’t adequately address users’ problems. Designers therefore need to recognize and counter confirmation bias so that their findings reflect the diverse perspectives of users.

I observed this bias in play during a recent collaboration with a design team, where we gathered user feedback on a software development company’s website. Multiple participants expressed a preference for a shorter onboarding process, which surprised me because I had believed the existing flow to be intuitive. Rather than giving this feedback due attention, I unintentionally prioritized comments on other topics, such as the placement of a button or a distracting color scheme. Recognizing and mitigating such biases is essential to ensuring a well-rounded and unbiased interpretation of user feedback.

Only when our team analyzed the feedback with an affinity diagram (an organized compilation of notes grouped by shared themes) did the substantial volume of onboarding concerns become evident. It was at this point that I acknowledged my bias for what it was.

To address the onboarding issue, we streamlined the on-screen questions, relocating some to a later step in the process. Subsequent user tests affirmed that the revised approach indeed felt shorter and smoother for users. The utilization of affinity mapping proved invaluable in mitigating the risk of disproportionately focusing on one aspect of user feedback, encouraging us to visualize and consider all data points.
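The counting step behind an affinity diagram can be sketched in a few lines of Python. This is a minimal illustration, not the process our team used: the notes, the theme labels, and the helper names `group_by_theme` and `rank_themes` are all hypothetical, and it assumes each note has already been tagged with a theme by hand.

```python
from collections import defaultdict

# Hypothetical feedback notes, each tagged with a theme by the team.
notes = [
    ("onboarding", "Sign-up asks too many questions"),
    ("onboarding", "Onboarding felt long"),
    ("layout", "Button is hard to find"),
    ("onboarding", "I wanted to skip some steps"),
    ("color", "Colors are distracting"),
]


def group_by_theme(tagged_notes):
    """Collect note texts under their shared theme."""
    groups = defaultdict(list)
    for theme, text in tagged_notes:
        groups[theme].append(text)
    return dict(groups)


def rank_themes(groups):
    """Order themes from most to least frequently mentioned."""
    return sorted(groups.items(), key=lambda item: len(item[1]), reverse=True)


groups = group_by_theme(notes)
for theme, items in rank_themes(groups):
    print(f"{theme}: {len(items)} notes")
```

Seeing every theme ranked by volume makes it harder to wave away a large cluster simply because it contradicts what the researcher expected.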

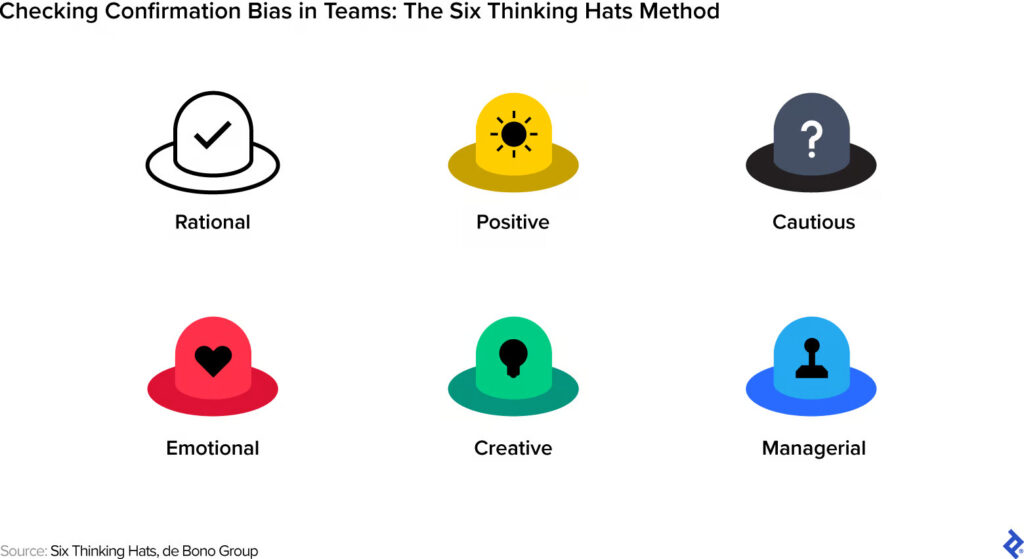

Another effective method for combating confirmation bias is the Six Thinking Hats, a technique developed by Edward de Bono and promoted by the de Bono Group. This method involves assigning each team member one of six different personas during user research, each represented by a hat of a distinct color: rational, positive, cautious, emotional, creative, and managerial. For instance, when the team leader designates a member to wear the green “creative” hat during a brainstorming session, that individual is tasked with presenting innovative solutions and unique perspectives. Meanwhile, the team member wearing the blue “managerial” hat is responsible for making sure the session follows the de Bono methodology guidelines. The Six Thinking Hats method establishes a checks-and-balances approach, enabling team members to identify and counteract each other’s errors and thereby combat cognitive biases.

Anchoring Effect: Influence on Feedback Skewing Based on Provided Options

The anchoring effect occurs when the first piece of information a person learns about a situation disproportionately shapes the decisions that follow. This cognitive bias affects many choices in daily life, such as perceiving a discounted item as a good deal even when the reduced price still exceeds the shopper’s original budget.

In the realm of user research, anchoring can intentionally or unintentionally sway the feedback users provide. Consider a multiple-choice question asking users to estimate the time required to complete a task—presenting specific options may constrain their thinking, leading them to choose a higher or lower estimate than they might have otherwise given. This effect becomes pronounced when questionnaires inquire about quantities, measurements, or other numeric values.

To mitigate the adverse effects of anchoring, careful word choice and presentation of options are essential. For questions related to specific metrics, allowing users to input their estimates, rather than presenting predefined options, can be beneficial. When options are necessary, employing numeric ranges is advisable.

Anchoring also influences qualitative feedback, so it’s prudent to avoid leading questions that set the tone for subsequent responses. Instead of asking, “How easy is this feature to use?” prompt users to describe their experience using the feature without suggestive language.