Navigating Debugging Challenges: An Insight into Jobswifi Engineers’ Approach

Jobswifi’s Talent Portal, the web application that gives talent access to the network and to job opportunities, is a cornerstone software service that delivers substantial business value. Working on this platform is both high-stakes and fast-paced, and it requires a commitment to the best possible user and developer experience. In pursuit of that goal, we replaced the outdated portal with an upgraded platform and a new codebase, and we now deploy updates to production multiple times a day to stay agile.

Nevertheless, as we harnessed the power to rapidly deliver enhancements, we recognized the corresponding responsibility for meticulous code maintenance. The introduction of new code inherently brings forth new challenges in the form of bugs, demanding swift resolution to sustain seamless updates to production. This reality came to the forefront when I recently confronted a Cypress test failure that not only obstructed our entire delivery pipeline but also disrupted the workflow of numerous engineers.

Rather than viewing this as a setback, my team embraced the opportunity to turn this challenge into a valuable learning experience. The encountered bug served as a catalyst for delving deep into our testing framework, understanding its intricacies, and ultimately enhancing our project’s infrastructure. The collaborative effort not only addressed the immediate issue but also positioned our team to proactively handle similar challenges in the future, fostering a culture of continuous improvement and resilience within our engineering endeavours.

An In-Depth Exploration of Jobswifi’s Engineering Initiatives: The Portal Site

To gain insights into the intricacies of engineering projects at Jobswifi, we’ll delve into the workings of our updated Talent Portal site. This examination will shed light on the technologies, collaborative partnerships, and testing standards that typically play a crucial role in shaping and enhancing our projects.

What Technologies Drive Our Operations?

Jobswifi engineers are adept at embracing cutting-edge technologies, and the Talent Portal is a prime example of this commitment. In our previous stack, we used jQuery and Backbone.js for data management. The revamped site uses GraphQL and Apollo Client instead to improve the developer experience, a combination that is widely used across our teams for its efficiency in data management. GraphQL, renowned for its speed, type-safety features, and adaptability to various data sources, serves as the backbone for accessing the information needed to render user interfaces within the Talent Portal. Apollo Client complements GraphQL by providing intelligent data caching for an even more streamlined data management experience. UI development changed as well: the shift from Ruby and JavaScript to React improves code maintainability, and the cleaner repository appeals to the growing community of React developers.

Strategic Partnerships for Quality Assurance

Given the profound impact our platforms have on the professional lives of clients and freelance talent, ensuring high product quality is imperative. Collaboration with dedicated quality assurance (QA) engineers, seamlessly integrated into the product team, and beta testers is a cornerstone of our approach. Prioritizing product quality over speed, we subjected the Talent Portal to a beta testing phase, sharing the new site with a select group of testers. The iterative improvements made based on their valuable feedback underscore our commitment to stable and effective releases.

To maintain stability during our rapid product update cycles, we deploy automated tests and comprehensive test cases, meticulously curated by our QA engineers. Automated end-to-end tests, powered by the Cypress testing framework, play a crucial role in validating our projects. The browser-based nature of Cypress allows us to cover cases that may be challenging for other environments, ensuring a robust testing infrastructure.

A Commitment to Testing Standards

Testing is integral to Jobswifi’s engineering ethos, acting as a linchpin for the reliability of our products. The Talent Portal team, like other teams, runs automated end-to-end tests with the Cypress testing framework. Running these tests before finalizing product changes is standard practice and keeps our main code branch stable. When a test fails on the main branch, it is treated as an indicator of potential flakiness and receives immediate attention.

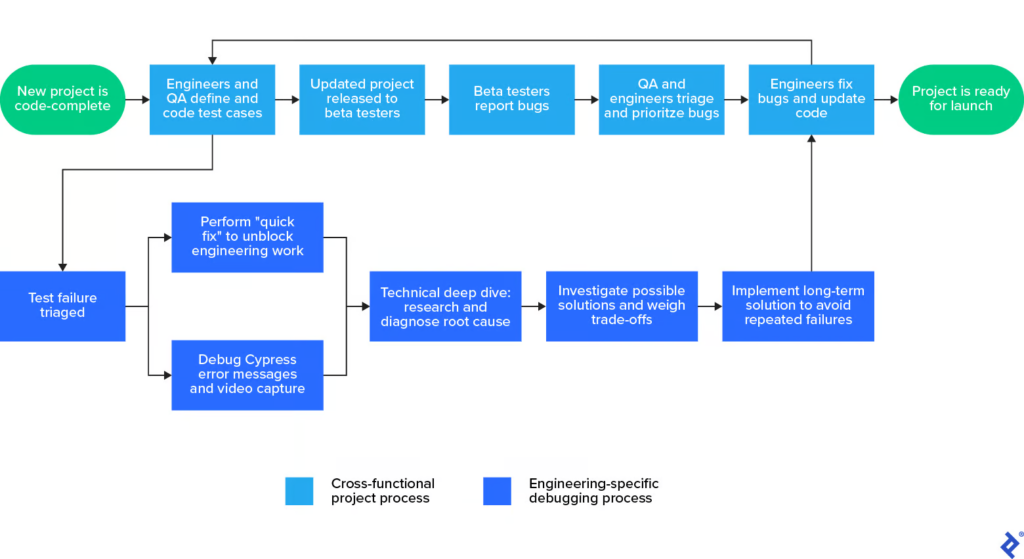

Addressing test failures involves a systematic approach. Automated notifications are dispatched to the relevant team, adhering to code ownership rules. For cases where ownership is unclear, dedicated QA or project engineers step in to pinpoint the responsible team. This collaborative effort continues until the project achieves stability, involving bug fixes and iterations on test cases.

Navigating the Debugging Process: A Case Study

In the intricate landscape of engineering projects, bugs are inevitable. The debugging process is a crucial facet of ensuring the reliability and performance of our applications. To offer a glimpse into how Jobswifi engineers tackle bugs effectively, let’s explore a case study involving a Cypress test failure and the subsequent debugging journey.

Unraveling the Root Cause: A Cypress Test Failure

Picture this: A notification arrives, signaling a Cypress test failure on the main branch of our Talent Portal. This is not just a routine glitch; a failing test acts as a roadblock in our delivery pipeline, disrupting the workflow of engineers. Immediate action is imperative. While quick fixes may unblock the pipeline temporarily, understanding the root cause becomes paramount for long-term stability and improvement.

The day in question marked the discovery of a failure caused by Apollo Client overfetching. The triage system swiftly alerted our team, and the urgency to address the issue and prevent its recurrence was palpable. The bug warranted immediate attention not only for its resolution but also for the insights it could provide into our testing framework, infrastructure enhancements, and knowledge sharing with other teams facing similar challenges.

Upon receiving the initial error message indicating an unreachable element, our investigation began. The recorded video of the test execution revealed a puzzling scenario: the element, a button in this instance, appeared visible on the page. The mystery deepened until we discerned the presence of two copies of the button element:

- The instance referenced by Cypress is currently detached from the page.

- A new copy of the button is visible on the page.
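The two-copies situation can be illustrated without a browser. The sketch below is a minimal model under stated assumptions, not how Cypress or React work internally: `FakeNode`, `page`, `render`, and `isAttached` are hypothetical names, and the "page" is a plain object. It shows how a reference captured before a re-render can end up pointing at a detached node while an identical-looking copy is visible.

```javascript
// Hypothetical stand-in for a DOM node; real tests deal with browser elements.
class FakeNode {
  constructor(tag, text) {
    this.tag = tag;
    this.text = text;
    this.parent = null; // null means "detached from the page"
  }
}

// A toy "page" whose render() replaces its children with fresh nodes,
// similar in spirit to a UI component being unmounted and mounted again.
const page = { children: [] };

function render(buttonText) {
  // Detach whatever was rendered before.
  for (const child of page.children) child.parent = null;
  const button = new FakeNode('button', buttonText);
  button.parent = page;
  page.children = [button];
  return button;
}

function isAttached(node) {
  return node.parent === page && page.children.includes(node);
}

// 1. The test grabs a reference to the button.
const staleButton = render('Submit');

// 2. New data arrives (for example, from a repeated query) and the UI re-renders.
const freshButton = render('Submit');

console.log(isAttached(staleButton)); // false: the old reference is detached
console.log(isAttached(freshButton)); // true: the new copy is on the page
console.log(staleButton.text === freshButton.text); // true: both look the same
```

In this toy model, any action sent to `staleButton` would miss the page entirely, even though a recording would show a perfectly normal button.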

A Brief Interlude: Understanding Apollo Client Caching

To address the overfetching problem, let’s look at how the Apollo Client caching system works. Understanding this caching mechanism makes it much easier to evaluate potential solutions.

The Apollo Client’s cache stands as a linchpin in maintaining accessible and consistent data. It achieves this by storing the outcomes of previous queries in a local cache, ensuring swift access to data when identical queries are encountered. However, it’s imperative to acknowledge that the Apollo Client’s cache is not immune to challenges, particularly when dealing with duplicate network requests.
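As a rough mental model of "store the outcome of a previous query and reuse it for an identical one", consider the sketch below. It is deliberately simplified and is not Apollo Client’s implementation: Apollo Client normalizes objects instead of storing whole results by query text, and `createCachedClient`, `demoClient`, and the canned data are hypothetical names used only for illustration.

```javascript
// Toy result cache keyed by query text. This is NOT Apollo Client's
// implementation; it only models "identical query -> no second request".
function createCachedClient(fetchFromNetwork) {
  const cache = new Map();
  let networkRequests = 0;
  return {
    query(queryText) {
      // Cache hit: return the stored result without touching the network.
      if (cache.has(queryText)) return cache.get(queryText);
      // Cache miss: fetch, remember, and return the result.
      networkRequests += 1;
      const result = fetchFromNetwork(queryText);
      cache.set(queryText, result);
      return result;
    },
    get networkRequests() {
      return networkRequests;
    },
  };
}

// Hypothetical "server" that returns canned data for any query.
const demoClient = createCachedClient(() => ({
  articles: [{ id: 1, title: 'Hello' }],
}));

const articlesQuery = 'query Articles { articles { id title } }';
const firstResult = demoClient.query(articlesQuery);
const secondResult = demoClient.query(articlesQuery);

console.log(demoClient.networkRequests); // 1
console.log(firstResult === secondResult); // true
```

The second call is served from the cache, which is the behavior we normally rely on. The duplicate-request problem discussed next arises when cached data stops satisfying a query that was already answered.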

To illustrate this scenario, let’s explore a simplified example that avoids the complexity of our full project queries. In this instance, our Content function initiates two queries using useQuery hooks. However, when we inspect our debug log, an anomaly emerges: three operations are logged, namely DataForList1, DataForList2, and a repeated DataForList1. Let’s scrutinize the queries involved:

    import { gql } from '@apollo/client';

    // First list: needs each tag's name and color.
    const query1 = gql`
      query DataForList1 {
        articles {
          id
          title
          tags {
            name
            color
          }
        }
      }
    `;

    // Second list: needs each tag's name and articlesCount.
    const query2 = gql`
      query DataForList2 {
        articles {
          id
          title
          tags {
            name
            articlesCount
          }
        }
      }
    `;

Resolving the Query Conundrum

The two queries are similar: both select identical fields from the article type. They differ only in the fields selected from the tag type. In our setup, the tag type has no id field, so Apollo Client stores tags in a denormalized manner, as properties of the articles they belong to. Because the tags field is an array, Apollo Client’s default merge strategy is to replace the existing array with the incoming one. When the data for the first query arrives, Apollo Client populates the article’s cached tags with:

    {
      tags: [{name: 'HTML', color: 'blue'}, {name: 'JS', color: 'yellow'}, {name: 'PHP', color: 'red'}]
    }

Following the receipt of data for the second query, the cached tags for the article are overwritten:

    {
      tags: [{name: 'HTML', articlesCount: 10}, {name: 'JS', articlesCount: 12}, {name: 'PHP', articlesCount: 6}]
    }

Consequently, Apollo Client’s cache loses the tag colors and issues an extra HTTP request to fetch them. In this particular example, Apollo Client stops there because the data returned by the repeated query matches that of the initial query. However, if we were dealing with dynamic values such as counters, timestamps, or dates, the client could enter an endless loop, with the two queries perpetually triggering each other.
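The overwrite described above can be reproduced with plain objects. The following sketch is a toy model that only mimics the described behavior and makes no claim about Apollo Client’s internals: `cachedArticle`, `writeTags`, and `missingFields` are hypothetical names, and the tag values are the ones from the example.

```javascript
// Toy model of one cached article. Tags have no id, so they live inside
// the article instead of being stored as separate cache entries.
const cachedArticle = { id: 1, title: 'Caching', tags: [] };

// Models the "replace the existing array" behavior for incoming tags.
function writeTags(article, incomingTags) {
  article.tags = incomingTags;
}

// Returns the requested tag fields that the cached tags cannot satisfy.
function missingFields(article, requestedFields) {
  return requestedFields.filter((field) =>
    article.tags.some((tag) => !(field in tag))
  );
}

// Tag fields selected by DataForList1.
const fieldsForList1 = ['name', 'color'];

// The response for DataForList1 arrives first.
writeTags(cachedArticle, [
  { name: 'HTML', color: 'blue' },
  { name: 'JS', color: 'yellow' },
  { name: 'PHP', color: 'red' },
]);
const missingAfterFirstWrite = missingFields(cachedArticle, fieldsForList1);
console.log(missingAfterFirstWrite.length); // 0: DataForList1 is fully cached

// The response for DataForList2 arrives and replaces the whole array.
writeTags(cachedArticle, [
  { name: 'HTML', articlesCount: 10 },
  { name: 'JS', articlesCount: 12 },
  { name: 'PHP', articlesCount: 6 },
]);
const missingAfterSecondWrite = missingFields(cachedArticle, fieldsForList1);
console.log(missingAfterSecondWrite.join(',')); // color: DataForList1 needs a refetch
```

The sketch stops at the point where the extra request becomes necessary. It does not model how Apollo Client decides when to stop refetching.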